The Street View Sequences (SVS) dataset is designed for urban and suburban place recognition from image sequences. It consists of 6,000 km (1,500 km x 4) of street coverage and more than 700,000 Google Street View images. The images were taken from different viewpoints and at different times, between 2007 and 2018, in 10 major cities around the globe. Each image is labeled with its ground-truth geo-location. The dataset covers several challenges for place recognition, such as viewpoint, seasonal, weather, dynamic and structural changes. Its extent, the largest to date in several metrics, makes it a relevant resource for training deep neural networks. Because the images were gathered from Google Street View, they are well suited to autonomous car research.
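As a rough illustration of how the geo-location labels could be used, the sketch below decides whether two images show the same place by comparing the great-circle distance between their coordinates with a threshold. The function names, the `(latitude, longitude)` tuple layout and the 25 m threshold are assumptions made for this example; they are not part of the dataset specification.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def same_place(query, candidate, threshold_m=25.0):
    """Hypothetical match criterion: two (lat, lon) labels count as the
    same place if they lie within threshold_m metres of each other.
    The 25 m default is an assumed value, not one defined by the dataset."""
    return haversine_m(*query, *candidate) <= threshold_m
```

A stricter or looser threshold changes which image pairs count as positives, so it would need to be chosen to match the evaluation protocol in use.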

The video below gives some examples of sequences from the dataset and explains its content and structure. It also illustrates why lifelong place recognition is still a challenging problem by giving examples of places that have undergone significant perceptual changes. Would you be able to recognise that the images show the same place?

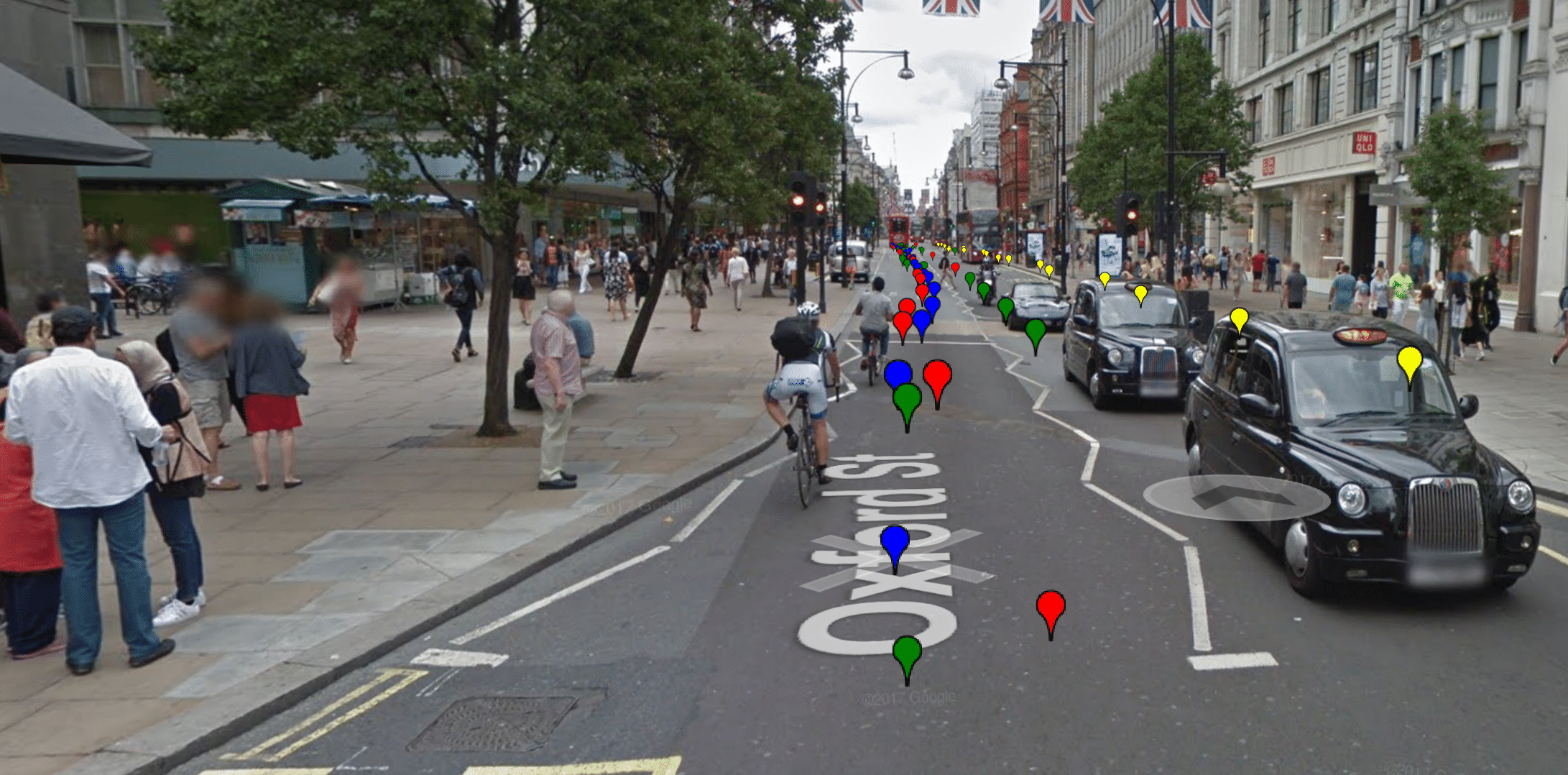

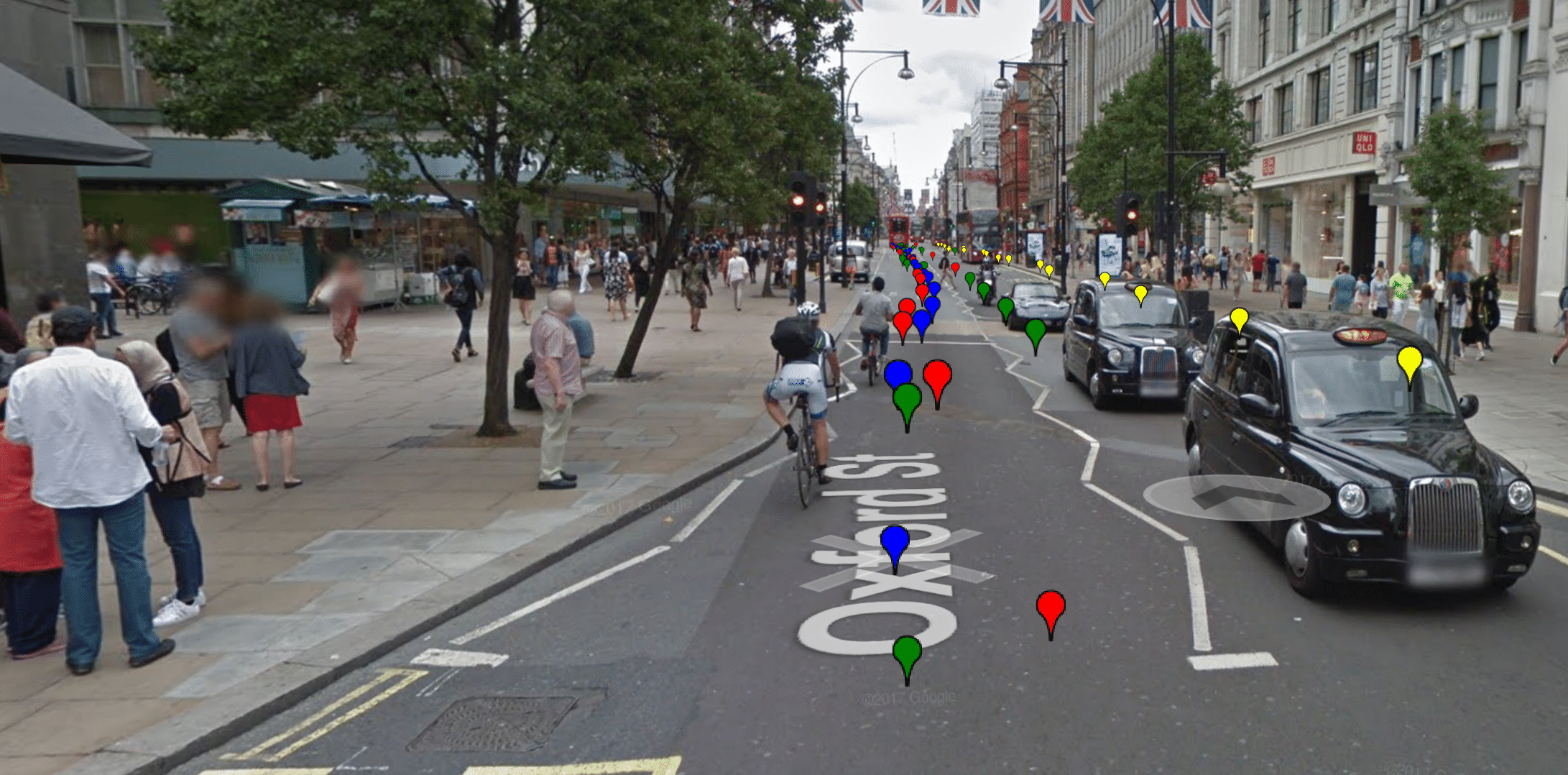

The images below show how differently places can appear. The examples demonstrate that the SVS dataset includes seasonal changes, different weather conditions, dynamic changes, structural changes and viewpoint changes. Furthermore, the diverse environments of the 10 chosen cities help models trained on the dataset to generalize.

We have divided the full dataset into 10 smaller datasets, one per city of origin. These can be downloaded from the links below. We also provide a link for downloading all the data at once.

All datasets and benchmarks on this page are copyrighted by us and published under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License. This means that you must attribute the work in the manner specified by the authors, you may not use this work for commercial purposes, and if you alter, transform, or build upon this work, you may distribute the resulting work only under the same license.
